ELVIS: Enhance Low-Light for Video Instance Segmentation in the Dark

Funders: MyWorld Strength in Places

Abstract

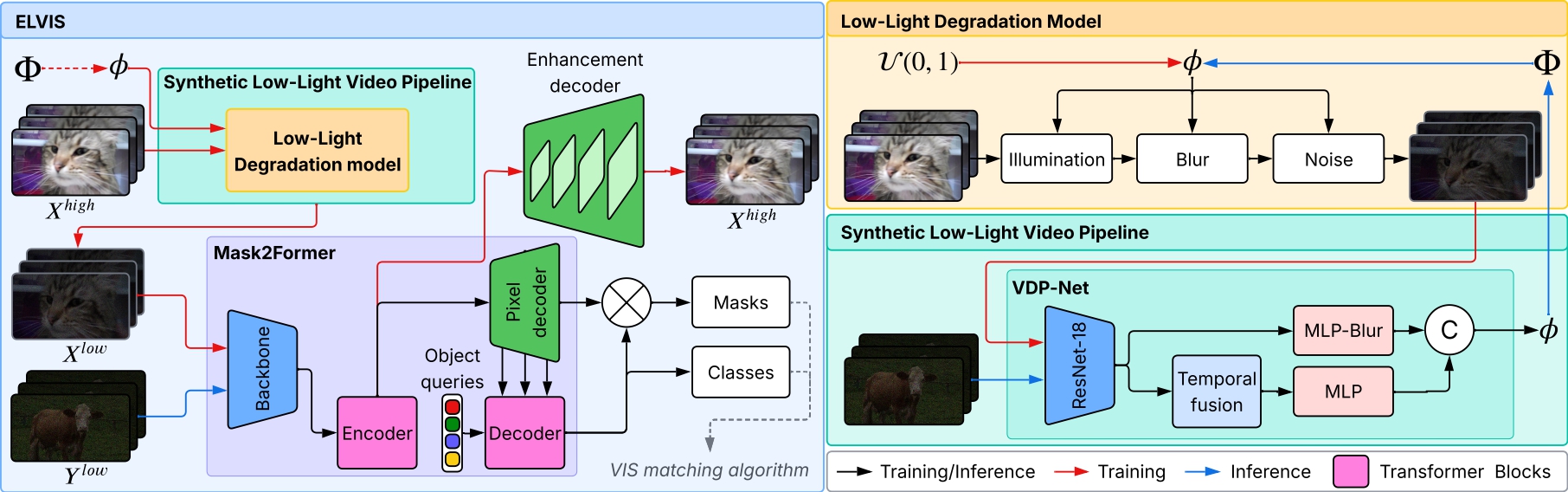

Video instance segmentation (VIS) for low-light content remains highly challenging for both humans and machines, due to adverse imaging conditions including noise, blur and low contrast. The lack of large-scale annotated datasets and the limitations of current synthetic pipelines, particularly in modeling temporal degradations, further hinder progress. Moreover, existing VIS methods are not robust to the degradations found in low-light videos and, as a result, perform poorly even when fine-tuned on low-light data. In this paper, we introduce ELVIS (Enhance Low-light for Video Instance Segmentation), a novel framework that enables effective domain adaptation of state-of-the-art VIS models to low-light scenarios. ELVIS comprises three components: an unsupervised synthetic low-light video pipeline that models both spatial and temporal degradations, a calibration-free degradation profile synthesis network (VDP-Net), and an enhancement decoder head that disentangles degradations from content features. ELVIS improves performance by up to +3.7 AP on the synthetic low-light YouTube-VIS 2019 dataset.
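
The abstract does not spell out how the synthetic degradations are modeled. The snippet below is a purely illustrative sketch and is explicitly not the ELVIS pipeline, which is unsupervised and relies on VDP-Net to synthesize degradation profiles without calibration. Instead, it applies a common hand-crafted recipe. Gamma darkening plus a global exposure drop stand in for spatial degradation, random per-frame exposure flicker stands in for a temporal degradation, and Poisson-Gaussian noise stands in for sensor noise. Blur is not modeled. The function name, its parameters and their default values are hypothetical choices, not settings from the paper.

```python
from typing import Optional

import numpy as np


def synthesize_low_light_clip(
    clip: np.ndarray,
    gamma: float = 2.2,
    scale: float = 0.15,
    flicker_std: float = 0.05,
    photons_per_unit: float = 200.0,
    read_noise_std: float = 0.01,
    seed: Optional[int] = None,
) -> np.ndarray:
    """Hypothetical illustration: darken a normal-light clip and add noise.

    clip: float array of shape (T, H, W, C) with values in [0, 1].
    Returns a float32 array of the same shape, clipped to [0, 1].
    """
    if clip.ndim != 4:
        raise ValueError("expected clip of shape (T, H, W, C)")
    rng = np.random.default_rng(seed)
    num_frames = clip.shape[0]

    # Spatial degradation: non-linear (gamma) darkening, then a global exposure drop.
    dark = scale * np.power(np.clip(clip, 0.0, 1.0), gamma)

    # Temporal degradation: one random exposure gain per frame (flicker),
    # broadcast over height, width and channels.
    gains = np.clip(1.0 + flicker_std * rng.standard_normal(num_frames), 0.5, 1.5)
    dark = dark * gains[:, None, None, None]

    # Shot noise: Poisson photon counts, rescaled back to [0, 1] intensities.
    shot = rng.poisson(dark * photons_per_unit) / photons_per_unit

    # Read noise: zero-mean Gaussian, drawn independently for every pixel and frame.
    noisy = shot + rng.normal(0.0, read_noise_std, size=dark.shape)
    return np.clip(noisy, 0.0, 1.0).astype(np.float32)
```

For example, `synthesize_low_light_clip(np.full((4, 8, 8, 3), 0.8), seed=0)` returns a much darker, noisy 4-frame clip. A learned, calibration-free approach such as the one the abstract describes would replace these fixed parameters with degradation profiles inferred from real low-light footage.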


Citation

If you use our work in your research, please cite using the following BibTeX entry:

@article{lin2025elvis,
  author={Lin, Joanne and Lin, Ruirui and Li, Yini and Bull, David and Anantrasirichai, Nantheera},
  title={ELVIS: Enhance Low-Light for Video Instance Segmentation in the Dark},
  year={2025},
  publisher={arXiv}
}